The U.S. government’s 2025 AI Action Plan outlines a strategy to accelerate artificial intelligence adoption by reducing regulatory friction, expanding national infrastructure, and promoting U.S.-developed AI technologies globally.

While the plan is aimed at boosting innovation and competitiveness, wider adoption of AI tools and services introduces new risk exposures for companies implementing or developing AI capabilities.

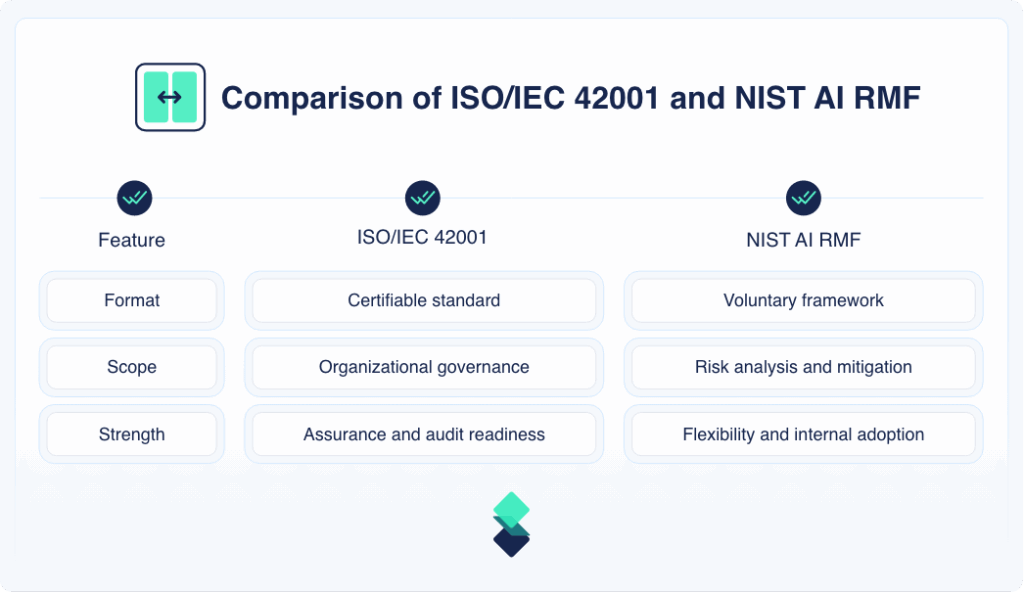

As organizations integrate AI more deeply into their products and operations, the need for reliable security and governance frameworks becomes critical. The ISO/IEC 42001:2023 standard and the NIST AI Risk Management Framework (AI RMF) can help security leaders align their efforts with evolving expectations and demonstrate accountability in a complex policy and regulatory environment.

A Federal Shift Toward Speed and Scale

The U.S. government’s AI strategy is centered on reducing barriers to adoption and positioning the United States as a global leader in AI infrastructure and innovation. The plan is structured around three key pillars:

Pillar I: Accelerating AI Innovation

Federal and state agencies are encouraged to remove perceived regulatory bottlenecks, redirect funding to jurisdictions with business-friendly policies, and support open-source models and datasets. To create downstream demand, the federal government is positioning itself as an early adopter of AI tools.

Pillar II: Building National AI Infrastructure

To address long-term capacity needs for AI workloads, the plan promotes measures such as expediting permits for data center construction, making federal land available for development, strengthening electrical infrastructure, and expanding domestic semiconductor production.

Pillar III: Leading in Global AI Policy and Security

The U.S. will promote exports of the full AI technology stack—hardware, software, and models—to trusted partners, while updating export controls to reduce the risk of sensitive technologies reaching adversaries. Notably, proposed revisions to the NIST AI RMF suggest a move toward “ideological neutrality” in federal procurement.

Taken together, these initiatives aim to lower regulatory friction, increase deployment speed, and create incentives for public and private adoption.

Security Considerations and Emerging Risk Areas

Faster adoption and expanded infrastructure introduce new risks that must be addressed proactively. Among the most pressing for security teams:

- Broader attack surfaces. AI deployments can introduce vulnerabilities, including the exposure of sensitive training data, model inversion attacks, or misconfigured cloud environments hosting AI workloads.

- Supply chain dependencies. The growing use of open-source components and offshore resources creates potential supply chain weaknesses. Organizations must assess provenance, integrity, and maintenance practices of AI inputs and supporting infrastructure.

- AI-enabled threats. Malicious use of AI, such as synthetic phishing, automated exploitation, or model manipulation, requires updated incident response capabilities. The plan acknowledges this by calling for frameworks to support industry-led threat prevention and response.

- Evaluation of frontier models. High-impact models, including those that could be misused in areas such as bioengineering or cybersecurity, may require specialized testing, access controls, and continuous monitoring to mitigate potential harms.

- Export control challenges. Expanding the global reach of U.S. AI tools may increase market share, but it also raises the stakes around enforcement. Insufficient controls could lead to technology transfers that undermine national security or corporate IP.

- Gaps in oversight and workforce capacity. The shift away from social and environmental oversight in federal policy may reduce accountability in areas such as algorithmic fairness. Meanwhile, many security teams are still building internal capacity to address AI-specific risks, with training potentially lagging behind deployment.
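
The provenance and integrity checks mentioned under supply chain dependencies can be made concrete with a small example. The following is a minimal sketch, assuming an organization keeps a manifest of pinned SHA-256 digests for the model files and datasets it has approved. The function names `sha256_of` and `verify_artifact` and the manifest layout (file name mapped to hex digest) are hypothetical and are not drawn from the plan or from either framework.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, pinned: dict[str, str]) -> bool:
    """Check a file against the digest pinned under its file name.

    `pinned` is an assumed manifest format: {file name: hex SHA-256 digest}.
    Returns False if the file is not listed or the digests differ.
    """
    expected = pinned.get(path.name)
    if expected is None:
        return False
    # Constant-time comparison; digests are compared case-insensitively.
    return hmac.compare_digest(sha256_of(path), expected.lower())
```

A check like this only confirms that a file matches what was approved. It says nothing about whether the approved artifact was trustworthy in the first place, so it complements rather than replaces a review of the supplier's maintenance practices.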

Established Governance Frameworks

Security executives seeking to address these risks can look to ISO/IEC 42001 and the NIST AI RMF as practical tools to guide and structure AI risk management and oversight.

ISO/IEC 42001 provides a formal, certifiable framework for managing AI systems across their lifecycle. It emphasizes governance, transparency, risk-based controls, and human oversight, principles that align closely with U.S. policy goals, including those outlined in Executive Order 14110 (rescinded in January 2025) and OMB’s draft guidance on AI use in federal agencies.

NIST’s framework is non-certifiable but widely referenced. It is organized around four core functions—Map, Measure, Manage, and Govern—and is designed to help organizations identify and mitigate risks to individuals, systems, and organizations posed by AI.

While ISO/IEC 42001 establishes organizational controls suitable for audit and certification, the NIST AI RMF provides an adaptable model for day-to-day risk management. Used together, they offer complementary approaches:

Organizations can use the NIST AI RMF to inform the design of their AI programs and build toward ISO/IEC 42001 certification.
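
One way a security team might start using the NIST AI RMF as a design input is to tag each governance activity with the core function it supports and then look for functions with no coverage. The sketch below illustrates that idea only. The four function names come from the framework, while the `Activity` record, its fields, and the `uncovered_functions` helper are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class RmfFunction(Enum):
    """The four core functions of the NIST AI RMF."""
    GOVERN = "Govern"
    MAP = "Map"
    MEASURE = "Measure"
    MANAGE = "Manage"


@dataclass(frozen=True)
class Activity:
    """A governance activity tagged with one function (assumed schema)."""
    description: str
    function: RmfFunction
    owner: str


def uncovered_functions(activities: list[Activity]) -> list[RmfFunction]:
    """Return the functions that have no recorded activity, in enum order."""
    covered = {activity.function for activity in activities}
    return [function for function in RmfFunction if function not in covered]
```

For example, a register containing only a Map activity (an inventory of AI systems in production) and a Govern activity (an approved acceptable-use policy) would report Measure and Manage as uncovered, which points to where the program still needs work before a certification effort.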

Here’s a summary of how the frameworks align with the U.S. AI Action Plan:

Practical Business Benefits of Certification

Although ISO/IEC 42001 is a voluntary standard, certification may serve as a differentiator in several ways:

- Trust and Marketability: Demonstrating formal AI governance can strengthen trust among customers, investors, and regulators.

- Procurement Readiness: Agencies and enterprise buyers are increasingly requesting evidence of governance; certification supports compliance with internal procurement requirements.

- Cross-Border Operations: As ISO standards are recognized internationally, certification may simplify operations across jurisdictions.

- Operational Risk Reduction: A structured management system can help organizations identify gaps, respond to incidents more effectively, and reduce reputational risk.

Certification is not mandatory, but it offers a structured path toward transparency and assurance, especially in high-stakes, heavily regulated environments.

Aligning With Policy While Managing Risk

The 2025 AI Action Plan signals a policy environment focused on speed, infrastructure investment, and global competitiveness. But it also places greater responsibility on companies to self-regulate and secure their AI deployments.

Security executives have an opportunity—and a growing obligation—to lead on AI risk governance. By adopting frameworks like ISO/IEC 42001 and the NIST AI RMF, organizations can not only strengthen their internal controls but also position themselves for long-term success in an increasingly complex ecosystem.

Together, the frameworks provide a solid foundation for accountability, resilience, and trust in an AI-driven future. To learn more about the frameworks and AI governance, contact us.